Teneo: a new paradigm for data monetization

- Bert van Kerkhoven

- Feb 7, 2025

The greatest heist in human history

Over the past two decades, we have seen the meteoric rise of the internet: the global online population grew from just 1 billion in 2005 to over 5.5 billion today, and 5.22 billion of those users are active on social media. This mega-trend has reshaped the entire fabric of our society, radically accelerating how knowledge and culture form and spread.

Behind the scenes, this trend enabled an unprecedented asymmetry of value extraction in the form of data. Given the novelty of the web, this extraction went largely unnoticed by the general public. Social interactions, product reviews and search queries, along with the metadata attached to them, have become the predominant resource powering trillion-dollar industries.

The foundations of modern artificial intelligence, algorithmic trading, and precision marketing have been built on data generated by billions of users, yet the financial surplus of this knowledge economy has been captured by a small group of technology monopolies.

This was not an unintended consequence of Web2. It was the business model of Web2.

The data value chain

If data is the new oil, big tech firms like Meta, Google, X and Reddit are the new OPEC. Having seized proprietary control of user-generated content without explicit consent, they have pushed the boundaries of value extraction by squeezing every possible signal out of this data. Nor does this stop at logged-in users: external data aggregation enables shadow profiling of any website visitor.

The shadowy data economy is not confined to the platforms where the data is generated. An entire industry is built on data brokerage: large-scale broker networks package consumer, financial and social sentiment data into products sold to third parties.

Real-time social media datasets serve as input to natural language processing engines that power sentiment analysis products for hedge funds.

Advertising networks use the data to build psychometric profiles of potential customers, targeting them with high precision and manipulating them into buying.

Because the business model of social networks hinges on ad impressions, and therefore on time spent on the platform, they have an incentive to make users addicted, which rarely aligns with users' well-being (Jeff Orlowski's documentary The Social Dilemma illustrates this well).

Cambridge Analytica, a British political consulting firm, came under scrutiny for the alleged misappropriation of user data to swing the Brexit referendum in favor of the Leave camp. Subsequent investigations showed that Cambridge Analytica was far from unique: your online data footprint is used to polarize, radicalize and ultimately influence elections.

The rise of AI

In late 2022, LLMs went mainstream with OpenAI's launch of ChatGPT. Although OpenAI has not disclosed its training data, it is widely assumed to include petabytes of data scraped from public sources. Some authors and organizations (such as George R.R. Martin and The New York Times) have taken legal action against OpenAI, claiming it trained on copyrighted material. Meanwhile, platforms that have extracted and monetized user-generated data for years now complain that OpenAI overstepped fair use. While these lawsuits are unprecedented and the law is ambiguous and outdated, virtually every platform is now taking action against mass scraping of the content it hosts:

- X has imposed exorbitant API fees, making real-time sentiment analysis prohibitively expensive for smaller firms.
- Reddit's API restrictions have eliminated cost-effective access to structured discussion datasets, previously used for AI fine-tuning.
- Google and Meta now closely guard proprietary engagement datasets, restricting their use to internal product development.

Across the board, websites are now updating their robots.txt files to prevent data scraping. Additionally, increasingly sophisticated techniques such as honeypot links, dynamic content loading and IP fingerprinting are being used to deter automated data extraction.
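For readers unfamiliar with the mechanism: robots.txt is a plain-text file at a site's root that tells each crawler, identified by its user-agent string, which paths it may fetch. It is a voluntary convention (standardized as RFC 9309), not an access control, which is why sites layer the other techniques on top. The sketch below evaluates an illustrative file with Python's standard-library `urllib.robotparser`; the rules and `example.com` URLs are assumptions, while `GPTBot` is the user-agent token OpenAI publishes for its crawler.

```python
from urllib import robotparser

# Illustrative robots.txt (an assumption, not any real site's file):
# block OpenAI's GPTBot crawler entirely, keep all other agents out of /private/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def build_parser(text: str) -> robotparser.RobotFileParser:
    """Parse robots.txt rules from a string instead of fetching them over HTTP."""
    parser = robotparser.RobotFileParser()
    parser.parse(text.splitlines())
    return parser


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    # A compliant crawler calls can_fetch() before requesting each URL.
    print(rules.can_fetch("GPTBot", "https://example.com/posts/1"))            # False
    print(rules.can_fetch("ExampleBrowser", "https://example.com/posts/1"))    # True
    print(rules.can_fetch("ExampleBrowser", "https://example.com/private/a"))  # False
```

Nothing in the file stops a crawler that simply ignores it; enforcement has to come from measures like the honeypots and fingerprinting mentioned above.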

For the general public, the impact of LLMs trained on user-generated content is far greater than that of any earlier use of this data. Previously, this data was mainly used to influence the consumer side of the economy; the drastic impact AI could have on the production side puts data usage and monetization center stage. Community-driven platforms such as Stack Overflow, once the backbone of knowledge exchange in software development, are now being replaced by AI code-generation tools trained on their own knowledge base. Once the output of these tools is good enough to enable large-scale job displacement, we can expect public awareness of how data is used to spike.

The marginal value of data

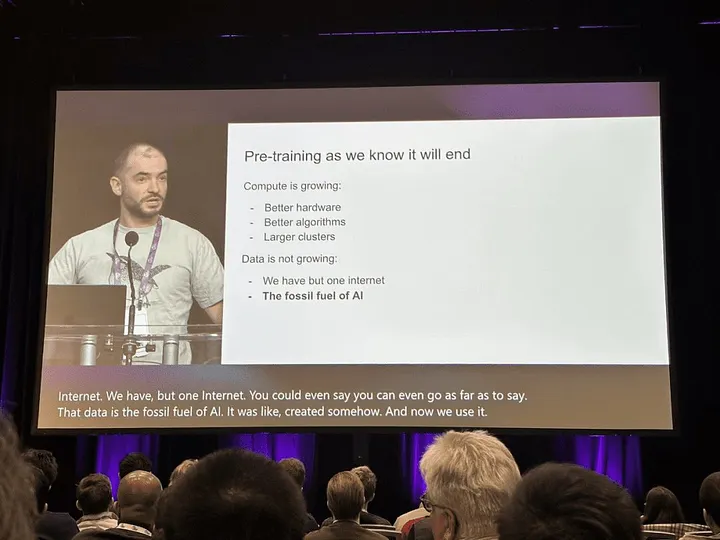

While the leaders of AI labs disagree on a lot (e.g. the debate between Musk and Altman over open- vs closed-source AI), they seem to agree on one thing: we are running out of data to train foundation models.

These claims have some merit as far as foundation models are concerned. However, the release of DeepSeek R1, an open-weights reasoning model that rivaled leading proprietary models, marked a Sputnik moment in the LLM arms race. It showed that foundation models are not defensible; as a result, value is likely to accrue at the application layer, where foundation models are fine-tuned for specific use cases.

In this new paradigm, the critical component shifts entirely from engineering to data input. More specifically, it is highly specialized, real-time data that unlocks applications that were previously out of reach.

An obvious example of specialized data is the content generated on social networks such as X. Whether the topic is finance, politics or emergencies, X typically has the most recent data, providing a high-signal basis for fine-tuning models.

This need arises just as platforms have imposed rate limits on access to their data. Enterprise API access to X is estimated to cost between $42,000 and $210,000 per month (for 50 million and 200 million tweets, respectively).
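To make the quoted range easier to compare, the sketch below converts each tier into an effective price per 1,000 tweets. The two tiers are the estimates quoted above, not official X pricing, and the function name is illustrative.

```python
# Monthly enterprise tiers as estimated in the text: (USD per month, tweets per month).
TIERS = {
    "50M tweets": (42_000, 50_000_000),
    "200M tweets": (210_000, 200_000_000),
}


def usd_per_thousand_tweets(monthly_usd: int, tweets: int) -> float:
    """Effective price in USD per 1,000 tweets for a flat monthly tier."""
    return monthly_usd * 1_000 / tweets


if __name__ == "__main__":
    for name, (usd, count) in TIERS.items():
        print(f"{name}: ${usd_per_thousand_tweets(usd, count):.2f} per 1,000 tweets")
    # 50M tweets: $0.84 per 1,000 tweets
    # 200M tweets: $1.05 per 1,000 tweets
```

On these estimates the larger tier is actually more expensive per tweet ($1.05 vs $0.84 per 1,000), so buying more volume does not lower the unit cost.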

Enter Teneo: the permissionless data layer

Teneo users install lightweight Community Nodes, which act as decentralized data collectors, aggregating real-time insights from social platforms such as X.

Instead of relying on centralized corporate APIs, businesses can query Teneo’s permissionless, censorship-resistant data streams. This allows them to legally and securely bypass even the most sophisticated traps and blocks that are put in place to prevent automated scraping.

Teneo levels the playing field by providing access to real-time X data at a price 90% lower than X's API pricing. Democratized access to this data could tip the scales toward a future where this transformative technology is freely accessible to everyone.
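As a rough illustration of what "90% lower" means in dollar terms, the sketch below applies that discount to the two X tier estimates quoted earlier. It assumes, hypothetically, that the discount applies uniformly to both tiers; the article does not state which tier or volume the comparison is based on.

```python
# X tier estimates from the text: (USD per month, tweets per month).
TIERS = {
    "50M tweets": (42_000, 50_000_000),
    "200M tweets": (210_000, 200_000_000),
}


def discounted_price(price_usd: int, discount_pct: int = 90) -> float:
    """Price after a percentage discount; multiplying before dividing keeps whole-dollar results exact."""
    return price_usd * (100 - discount_pct) / 100


if __name__ == "__main__":
    for name, (usd, _count) in TIERS.items():
        print(f"{name}: ${usd:,} -> ${discounted_price(usd):,.0f} per month")
    # 50M tweets: $42,000 -> $4,200 per month
    # 200M tweets: $210,000 -> $21,000 per month
```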

Rather than allowing corporations to extract behavioral data without compensation, Teneo aligns incentives by rewarding contributors with $TENEO tokens, creating a direct economic relationship between data producers and data consumers.

For the first time, a decentralized data economy ensures that users are not just passive participants in the AI revolution, but direct economic beneficiaries.

Disclaimer: MN Capital is an investor in Teneo